Summer School 2025

The mission of the IAIFI PhD Summer School is to leverage the expertise of IAIFI researchers, affiliates, and partners toward promoting education and workforce development.

- August 4–8, 2025

- Harvard, Cambridge, MA

The Summer School was followed by the IAIFI Summer Workshop, which was open to researchers of all career stages.

About

The Institute for Artificial Intelligence and Fundamental Interactions (IAIFI) is enabling physics discoveries and advancing foundational AI through the development of novel AI approaches that incorporate first principles, best practices, and domain knowledge from fundamental physics. The Summer School will include lectures and events that illustrate interdisciplinary research at the intersection of AI and Physics, and encourage global networking. Hands-on code-based tutorials that build on foundational lecture materials help students put theory into practice, and a hackathon project provides an opportunity for students to collaborate and apply what they’ve learned.

Costs

- There is no registration fee for the Summer School. Costs of dorm accommodations will be reimbursed by IAIFI, contingent upon attendance. Students for the Summer School are expected to cover the cost of travel.

- Lunch each day, as well as coffee and snacks at breaks, will be provided during the Summer School, along with at least one dinner.

- Students who wish to stay for the IAIFI Summer Workshop will be able to book the same rooms through the weekend and the Workshop if they choose (at their own expense).

Lecturers

Topic: Reinforcement Learning

Lecturer: Sasha Rakhlin, Professor, MIT

Topic: Domain Shift Problem: Building Robust AI Models with Domain Adaptation

Lecturer: Aleksandra Ćiprijanović, Wilson Fellow Associate Scientist, Fermilab

Topic: Physics-Motivated Optimization

Lecturer: Gaia Grosso, IAIFI Fellow

Topic: Representation/Manifold Learning: Geometric Deep Learning

Lecturer: SueYeon Chung, Assistant Professor of Neural Science, NYU

Tutorial Leads

Topic: Reinforcement Learning

Tutorial Lead: Margalit Glasgow, Postdoc, MIT

Topic: Robust/Interpretable AI: Domain Adaptation

Tutorial Lead: Sneh Pandya, PhD Student, Northeastern/IAIFI

Topic: Physics-Motivated Optimization: Simulation Intelligence

Tutorial Leads: Sean Benevedes, PhD Student, MIT; Jigyasa Nigam, Postdoc, MIT

Topic: Representation/Manifold Learning: Geometric Deep Learning

Tutorial Lead: Sam Bright-Thonney, IAIFI Fellow

Agenda

Agenda is subject to change.

Monday, August 4, 2025

9:00–9:30 am ET

Welcome/Introduction

9:30 am–12:00 pm ET

Lecture 1: Elements of Interactive Decision Making (Sasha Rakhlin, MIT)

Machine learning methods are increasingly deployed in interactive environments, ranging from dynamic treatment strategies in medicine to fine-tuning of LLMs using reinforcement learning. In these settings, the learning agent interacts with the environment to collect data and necessarily faces an exploration-exploitation dilemma.

In this lecture, we’ll begin with multi-armed bandits, progressing through structured and contextual bandits. We’ll then move on to reinforcement learning and broader decision-making frameworks, outlining the key algorithmic approaches and statistical principles that underpin each setting. Our goal is to develop both a rigorous understanding of the learning guarantees and a toolbox of fundamental algorithms.

12:00–1:00 pm ET

Lunch

1:00–3:30 pm ET

Tutorial 1: Elements of Interactive Decision Making (Margalit Glasgow)

3:30–4:30 pm ET

Hackathon Introduction

5:00–5:30 pm ET

Break

5:30–7:30 pm ET

Welcome Dinner

Tuesday, August 5, 2025

9:00–9:30 am ET

Lightning Talks

9:30 am–12:00 pm ET

Lecture 2: Physics-Guided Optimizations: Inform and Inspire AI Models with Physics Principles (Gaia Grosso, IAIFI Fellow)

This lecture presents key concepts and techniques for designing machine learning models both informed by and inspired by physics principles. In the first part, we explore ways to inform AI models about physics constraints and data properties. We examine the highly structured nature of data in the physical sciences—such as images, point clouds, and time series—and discuss how their geometric and physical properties shape effective machine learning approaches. We then cover practical strategies for incorporating physical constraints through loss functions, regularization, and inductive biases. In the second part, we highlight how physical principles can inspire the design of optimization methods and AI architectures, introducing concepts like annealing, diffusion, entropy, and energy-based models, and showing examples of their growing role in modern machine learning.

Resources:

- An Introduction to Physics-Guided Deep Learning

- Energy Based Models

- The Role of Momentum in ML Optimization

- Entropy Regularization

- Hamiltonian Neural Networks

- Physics Informed Neural Networks

- New Frontiers for Hopfield Networks

- Diffusion Models

12:00–1:00 pm ET

Lunch

1:00–3:30 pm ET

Tutorial 2: Physics-Guided Optimizations: Inform and Inspire AI Models with Physics Principles (Sean Benevedes, MIT and Jigyasa Nigam, MIT)

3:30–4:30 pm ET

Breakout Sessions with Lecturers and Tutorial Leads

4:30–6:00 pm ET

Group work for hackathon

Wednesday, August 6, 2025

9:00–9:30 am ET

Lightning Talks

9:30 am–12:00 pm ET

Lecture 3: Computing with Neural Manifolds: A Multi-Scale Framework for Understanding Biological and Artificial Neural Networks (SueYeon Chung, Harvard)

Recent breakthroughs in experimental neuroscience and machine learning have opened new frontiers in understanding the computational principles governing neural circuits and artificial neural networks (ANNs). Both biological and artificial systems exhibit an astonishing degree of orchestrated information processing capabilities across multiple scales - from the microscopic responses of individual neurons to the emergent macroscopic phenomena of cognition and task functions. At the mesoscopic scale, the structures of neuron population activities manifest themselves as neural representations. Neural computation can be viewed as a series of transformations of these representations through various processing stages of the brain. The primary focus of my lab’s research is to develop theories of neural representations that describe the principles of neural coding and, importantly, capture the complex structure of real data from both biological and artificial systems.

In this lecture, I will present three related approaches that leverage techniques from statistical physics, machine learning, and geometry to study the multi-scale nature of neural computation. First, I will introduce new statistical mechanical theories that connect geometric structures that arise from neural responses (i.e., neural manifolds) to the efficiency of neural representations in implementing a task. Second, I will employ these theories to analyze how these representations evolve across scales, shaped by the properties of single neurons and the transformations across distinct brain regions. Finally, I will show how these insights extend efficient coding principles beyond early sensory stages, linking representational geometry to efficient task implementations. This framework not only helps interpret and compare models of brain data but also offers a principled approach to designing ANN models for higher-level vision. This perspective opens new opportunities for using neuroscience-inspired principles to guide the development of intelligent systems.

Resources:

- Chung, S., Lee, D.D. and Sompolinsky, H., 2018. Classification and geometry of general perceptual manifolds. Physical Review X, 8(3), p.031003.

- Cohen, U., Chung, S., Lee, D.D. and Sompolinsky, H., 2020. Separability and geometry of object manifolds in deep neural networks. Nature Communications, 11(1), p.746.

- Chung, S. and Abbott, L.F., 2021. Neural population geometry: An approach for understanding biological and artificial neural networks. Current Opinion in Neurobiology, 70, pp.137-144.

- Yerxa, T., Kuang, Y., Simoncelli, E. and Chung, S., 2023. Learning efficient coding of natural images with maximum manifold capacity representations. Advances in Neural Information Processing Systems, 36, pp.24103-24128.

12:00–1:00 pm ET

Lunch

1:00–3:30 pm ET

Tutorial 3: Computing with Neural Manifolds: A Multi-Scale Framework for Understanding Biological and Artificial Neural Networks (Sam Bright-Thonney, IAIFI Fellow)

3:30–4:30 pm ET

Career Panel

- Aleksandra Ćiprijanović, Wilson Fellow Associate Scientist, Fermilab

- Gaia Grosso, IAIFI Fellow

- Trevor McCourt, CTO, Extropic

- Matthew Rispoli, Quantitative Researcher, PDT Partners

- Nashwan Sabti, Senior Research Engineer, Soroco

4:30–5:30 pm ET

Break

5:30–6:30 pm ET

Presentation by Boris Hanin (Princeton University) sponsored by PDT Partners

6:30–8:00 pm ET

Networking event with IAIFI sponsored by PDT Partners

Thursday, August 7, 2025

9:00–9:30 am ET

Lightning Talks

9:30 am–12:00 pm ET

Lecture 4: Domain Shift Problem: Building Robust AI Models with Domain Adaptation (Aleksandra Ćiprijanović, Fermilab)

Artificial Intelligence (AI) is revolutionizing physics research, from probing the large-scale structure of the Universe to modeling subatomic interactions and fundamental forces. Yet a major challenge persists: AI models trained on simulations or on older experiments and astronomical surveys often perform poorly when applied to new data, exposing issues of dataset (domain) shift, model robustness, and uncertainty in predictions. This summer school session will introduce students to common challenges in applying AI across domains and present solutions based on domain adaptation, a set of techniques designed to improve model generalization under domain shift. We will cover foundational ideas, practical strategies, and current research frontiers in this area. Through examples in astrophysics, we’ll explore how domain adaptation can help bridge the gap between synthetic and real-world data, improve trust in model outputs, and advance scientific discovery. The concepts discussed are broadly applicable across physics and other scientific disciplines, making this a valuable topic for anyone interested in building robust, transferable AI models for science.

12:00–1:00 pm ET

Lunch

1:00–3:30 pm ET

Tutorial 4: Domain Shift Problem: Building Robust AI Models with Domain Adaptation (Sneh Pandya, Northeastern)

3:30–4:30 pm ET

Breakout Sessions with Lecturers and Tutorial Leads

4:30–5:30 pm ET

Group work for hackathon

Friday, August 8, 2025

9:00–9:30 am ET

Lightning Talks

9:30 am–12:00 pm ET

Hackathon

Projects

Project details to come.

12:00–1:00 pm ET

Lunch

1:00–3:00 pm ET

Hackathon

Projects

Project details to come.

3:00–3:45 pm ET

Hackathon presentations

3:45–4:00 pm ET

Closing

Financial Supporters

The Summer School is funded primarily by support from the National Science Foundation under Cooperative Agreement PHY-2019786. Computing resources are provided by the NSF ACCESS program.

We extend a sincere thank you to the following financial supporters of this year’s IAIFI Summer School:

If you are interested in supporting future IAIFI Summer Schools, email iaifi-summer@mit.edu

2025 Organizing Committee

- Fabian Ruehle, Chair (Northeastern University)

- Bill Freeman (MIT)

- Cora Dvorkin (Harvard)

- Thomas Harvey (IAIFI Fellow)

- Sam Bright-Thonney (IAIFI Fellow)

- Sneh Pandya (Northeastern)

- Yidi Qi (Northeastern)

- Manos Theodosis (Harvard)

- Marshall Taylor (MIT)

- Marisa LaFleur (IAIFI Project Manager)

- Thomas Bradford (IAIFI Project Coordinator)

Summer School 2024

The mission of the IAIFI PhD Summer School is to leverage the expertise of IAIFI researchers, affiliates, and partners toward promoting education and workforce development.

- August 5–9, 2024

- MIT, Stata Center (32 Vassar Street, Cambridge, MA), Room 155

About

The Institute for Artificial Intelligence and Fundamental Interactions (IAIFI) is enabling physics discoveries and advancing foundational AI through the development of novel AI approaches that incorporate first principles, best practices, and domain knowledge from fundamental physics. The Summer School will include lectures and events that exemplify AI + Physics, illustrate interdisciplinary research at the intersection of AI and Physics, and encourage diverse global networking. Hands-on code-based tutorials that build on foundational lecture materials help students put theory into practice.

Lecturers

Topic: Representation/Manifold Learning

Lecturer: Melanie Weber, Assistant Professor of Applied Mathematics and of Computer Science, Harvard

Topic: Uncertainty Quantification/Simulation-Based Inference

Lecturer: Carol Cuesta-Lazaro, IAIFI Fellow

Topic: Physics-Motivated Optimization

Lecturer: Cengiz Pehlevan, Assistant Professor of Applied Mathematics & Kempner Institute Associate Faculty, Harvard

Topic: Generative Models

Lecturer: Gilles Louppe, Professor, University of Liège

Tutorial Leads

Topic: Representation/Manifold Learning

Tutorial Lead: Sokratis Trifinopoulos (with Thomas Harvey, Incoming IAIFI Fellow)

Topic: Uncertainty Quantification/Simulation-Based Inference

Tutorial Lead: Jessie Micallef, IAIFI Fellow

Topic: Physics-Motivated Optimization

Tutorial Lead: Alex Atanasov, PhD Student, Harvard

Topic: Generative Modeling

Tutorial Lead: Gaia Grosso, IAIFI Fellow

Agenda

This agenda is subject to change.

Monday, August 5, 2024

9:00–9:30 am ET

Welcome/Introduction

9:30 am–12:00 pm ET

Lecture 1: Deep generative models: A latent variable model perspective, Gilles Louppe

Abstract

Deep generative models are probabilistic models that can be used as simulators of the data. They are used to generate samples, perform inference, or encode complex priors. In this lecture, we will review the principles of deep generative models from the unified perspective of latent variable models, covering variational auto-encoders, diffusion models, latent diffusion models, and normalizing flows. We will discuss the principles of variational inference, the training of generative models, and the interpretation of the latent space. Selected applications from scientific domains will also be presented.

12:00–1:00 pm ET

Lunch

1:00–3:30 pm ET

Tutorial 1: Deep generative models: A latent variable model perspective, Gaia Grosso

3:30–4:30 pm ET

Introduction to Quantum Reservoir Learning with QuEra, Pedro Lopes and Milan Kornjača

Abstract

Quantum machine learning has gained considerable attention as quantum technology advances, presenting a promising approach for efficiently learning complex data patterns. Despite this promise, most contemporary quantum methods require significant resources for variational parameter optimization and face issues with vanishing gradients, leading to experiments that are either limited in scale or lack potential for quantum advantage. To address this, we develop a general-purpose, gradient-free, and scalable quantum reservoir learning algorithm that harnesses the quantum dynamics of QuEra's Aquila to process data. Quantum reservoir learning on Aquila achieves competitive performance across various categories of machine learning tasks, including binary and multi-class classification, as well as time series prediction. The QuEra team performed a successful quantum machine learning demonstration on up to 108 qubits, the largest quantum machine learning experiment to date. We also observe comparative quantum kernel advantage in learning tasks by constructing synthetic datasets based on the geometric differences between generated quantum and classical data kernels. In this presentation, we will cover the general methods utilized to run quantum reservoir computing in QuEra's neutral-atom analog hardware, providing an introduction for users to pursue new research directions.

5:00–7:00 pm ET

Welcome Dinner

Tuesday, August 6, 2024

9:00–9:30 am ET

Lightning Talks

9:30 am–12:00 pm ET

Lecture 2: Geometric Machine Learning, Melanie Weber

Abstract

A recent surge of interest in exploiting geometric structure in data and models in machine learning has motivated the design of a range of geometric algorithms and architectures. This lecture will give an overview of this emerging research area and its mathematical foundation. We will cover topics at the intersection of Geometry and Machine Learning, including relevant tools from differential geometry and group theory, geometric representation learning, graph machine learning, and geometric deep learning.

12:00–1:00 pm ET

Lunch

1:00–3:30 pm ET

Tutorial 2: Geometric Machine Learning, Sokratis Trifinopoulos

3:30–4:30 pm ET

Breakout Sessions with Days 1 and 2 Lecturers and Tutorial Leads

4:30–6:00 pm ET

Group work for hackathon

Wednesday, August 7, 2024

9:00–9:30 am ET

Lightning Talks

9:30 am–12:00 pm ET

Lecture 3: Scaling and renormalization in high-dimensional regression, Cengiz Pehlevan

Abstract

From benign overfitting in overparameterized models to rich power-law scalings in performance, simple ridge regression displays surprising behaviors sometimes thought to be limited to deep neural networks. This balance of phenomenological richness with analytical tractability makes ridge regression the model system of choice in high-dimensional machine learning. In this set of lectures, I will present a unifying perspective on recent results on ridge regression using the basic tools of random matrix theory and free probability, aimed at researchers with backgrounds in physics and deep learning. I will highlight the fact that statistical fluctuations in empirical covariance matrices can be absorbed into a renormalization of the ridge parameter. This “deterministic equivalence” allows us to obtain analytic formulas for the training and generalization errors in a few lines of algebra by leveraging the properties of the S-transform of free probability. From these precise asymptotics, we can easily identify sources of power-law scaling in model performance. In all models, the S-transform corresponds to the train-test generalization gap, and yields an analogue of the generalized-cross-validation estimator.

12:00–1:00 pm ET

Lunch

1:00–3:30 pm ET

Tutorial 3: Physics-motivated optimization: Scaling and renormalization in high-dimensional regression, Alex Atanasov

3:30–4:30 pm ET

Career Panel

- Moderator: Alex Gagliano, IAIFI Fellow

- Carol Cuesta-Lazaro, IAIFI Fellow

- Pedro Lopes, Quantum Advocate, QuEra Computing

- Gilles Louppe, Professor, University of Liège

- Anton Mazurenko, Researcher, PDT Partners

- Cengiz Pehlevan, Assistant Professor of Applied Mathematics & Kempner Institute Associate Faculty, Harvard

- Partha Saha, Distinguished Engineer, Data and AI Platform, Visa

- Melanie Weber, Assistant Professor of Applied Mathematics and of Computer Science, Harvard

4:30–5:30 pm ET

Networking Reception sponsored by PDT Partners

Thursday, August 8, 2024

9:00–9:30 am ET

Lightning Talks

9:30 am–12:00 pm ET

Lecture 4: Simulation-Based Inference for Modern Scientific Discovery, Carol Cuesta-Lazaro

Abstract

In this lecture, we will explore the foundations and applications of simulation-based inference (SBI) methods, demonstrating their efficiency in solving inverse problems across scientific disciplines. We'll introduce key approaches to SBI, including Neural Likelihood Estimation (NLE), Neural Posterior Estimation (NPE), and Neural Ratio Estimation (NRE), while highlighting their connections to data compression, density estimation, and generative models. Throughout the lecture, we'll address practical considerations such as computational efficiency and scalability to high-dimensional problems. We'll also examine recent advancements in the field, such as sequential methods and differentiable calibration, empowering attendees with the knowledge to adapt and customize these algorithms to their specific research challenges and constraints.

12:00–1:00 pm ET

Lunch

1:00–3:30 pm ET

Tutorial 4: Uncertainty Quantification, Jessie Micallef

3:30–4:30 pm ET

Breakout Sessions with Days 3, 4, and 5 Lecturers and Tutorial Leads (Optional)

4:30–5:30 pm ET

Group work for hackathon

5:30–10:00 pm ET

Social Event with IAIFI members

Details

5:30–7:00 pm ET: Picnic with IAIFI members

7:00–10:00 pm ET: Movie Night with MIT OpenSpace, The Imitation Game

Friday, August 9, 2024

9:00–9:30 am ET

Lightning Talks

9:30 am–12:00 pm ET

Hackathon

Projects

Project details to come.

12:00–1:00 pm ET

Lunch

1:00–2:30 pm ET

Hackathon

Projects

Project details to come.

2:30–3:30 pm ET

Hackathon presentations

3:30–4:00 pm ET

Closing

Financial Supporters

The Summer School is funded primarily by support from the National Science Foundation under Cooperative Agreement PHY-2019786. Computing resources are provided by the NSF ACCESS program.

We extend a sincere thank you to the following financial supporters of this year’s IAIFI Summer School:

We are also grateful to Foundry for providing compute as a prize for the hackathon.

2024 Organizing Committee

- Fabian Ruehle, Chair (Northeastern University)

- Demba Ba (Harvard)

- Alex Gagliano (IAIFI Fellow)

- Di Luo (IAIFI Fellow)

- Polina Abratenko (Tufts)

- Owen Dugan (MIT)

- Sneh Pandya (Northeastern)

- Yidi Qi (Northeastern)

- Manos Theodosis (Harvard)

- Sokratis Trifinopoulos (MIT)

Summer School 2023

The mission of the IAIFI PhD Summer School is to leverage the expertise of IAIFI researchers, affiliates, and partners toward promoting education and workforce development.

- August 7–11, 2023

- Northeastern University, Interdisciplinary Science and Engineering Complex

- Applications for the 2023 Summer School are now closed

About

The Institute for Artificial Intelligence and Fundamental Interactions (IAIFI) is enabling physics discoveries and advancing foundational AI through the development of novel AI approaches that incorporate first principles, best practices, and domain knowledge from fundamental physics. The Summer School included lectures and events that exemplify ab initio AI, illustrate interdisciplinary research at the intersection of AI and Physics, and encourage diverse global networking. Hands-on code-based tutorials that build on foundational lecture materials helped students put theory into practice.

Accommodations

Students for the Summer School had the option to reserve dorm rooms (expenses paid by IAIFI thanks to generous financial support from partners) at Boston University.

Boston University Housing, 10 Buick St, Boston, MA 02215

Costs

- There was no registration fee for the Summer School. Students for the Summer School were expected to cover the cost of travel.

- Lunch each day, as well as coffee and snacks at breaks, was provided during the Summer School, along with a dinner.

- Students who wished to stay for the IAIFI Summer Workshop were able to book the same rooms through the weekend and the Workshop if they chose (at their own expense).

Lecturers

Tutorial Leads

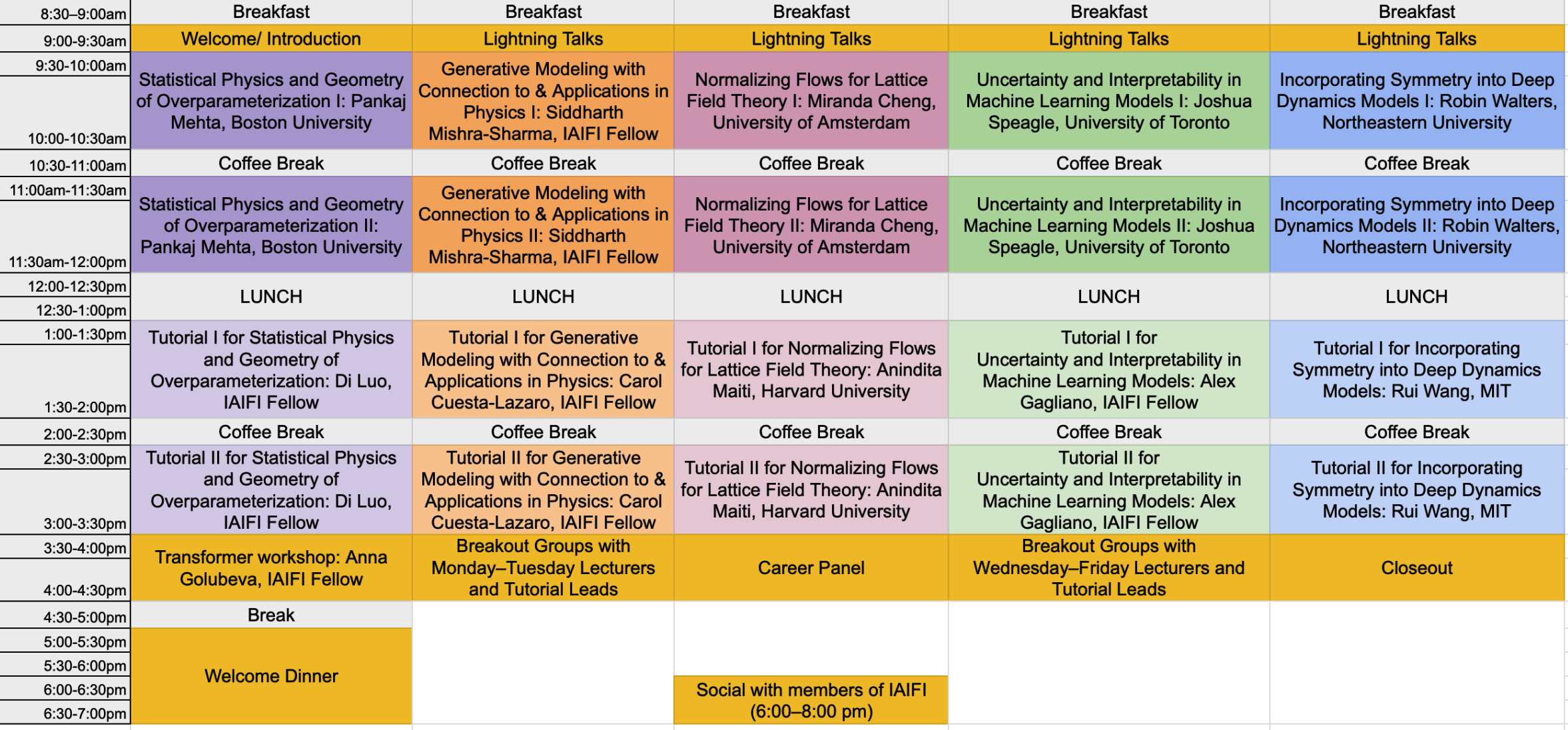

Agenda

Monday, August 7, 2023

9:00–9:30 am ET

Welcome/Introduction

9:30 am–12:00 pm ET

Lecture 1: Statistical Physics and Geometry of Overparameterization, Pankaj Mehta

Abstract

Modern machine learning often employs overparameterized statistical models with many more parameters than training data points. In this talk, I will review recent work from our group on such models, emphasizing intuitions centered on the bias-variance tradeoff and a new geometric picture for overparameterized regression.

12:00–1:00 pm ET

Lunch

1:00–3:30 pm ET

Tutorial 1: Statistical Physics and Geometry of Overparameterization, Di Luo

3:30–4:30 pm ET

Transformer Workshop, Anna Golubeva (Optional)

5:00–7:00 pm ET

Welcome Dinner

Tuesday, August 8, 2023

9:00–9:30 am ET

Lightning Talks

9:30 am–12:00 pm ET

Lecture 2: Generative modeling, with connection to and applications in physics, Siddharth Mishra-Sharma

Abstract

I will give a pedagogical tour of several popular generative modeling algorithms including variational autoencoders, normalizing flows, and diffusion models, emphasizing connections to physics where appropriate. The approach will be a conceptual and unifying one, highlighting relationships between different methods and formulations, as well as connections to neighboring concepts like neural compression and latent-variable modeling.

12:00–1:00 pm ET

Lunch

1:00–3:30 pm ET

Tutorial 2: Generative modeling, with connection to and applications in physics, Carolina Cuesta-Lazaro

3:30–4:30 pm ET

Breakout Sessions with Days 1 and 2 Lecturers and Tutorial Leads (Optional)

Wednesday, August 9, 2023

9:00–9:30 am ET

Lightning Talks

9:30 am–12:00 pm ET

Lecture 3: Normalizing Flows for Lattice Field Theory, Miranda Cheng

Abstract

Normalizing flows are powerful generative models in machine learning. Lattice field theories are indispensable as a computational framework for non-perturbative quantum field theories. In lattice field theory, one needs to generate sample field configurations in order to compute physical observables. In this lecture, I will survey the different normalizing flow architectures and discuss how they can be exploited in lattice field theory computations.

12:00–1:00 pm ET

Lunch

1:00–3:30 pm ET

Tutorial 3: Normalizing Flows for Lattice Field Theory, Anindita Maiti

3:30–4:30 pm ET

Career Panel, Panelists TBA (Optional)

6:00–8:00 pm ET

Social Event with IAIFI members

Thursday, August 10, 2023

9:00–9:30 am ET

Lightning Talks

9:30 am–12:00 pm ET

Lecture 4: Uncertainty and Interpretability in Machine Learning Models, Joshua Speagle

Abstract

In science, we are often concerned not just with whether our ML model performs well, but also with understanding how robust our results are, how to interpret them, and what we might be learning, especially in the presence of observational uncertainties. I will provide an overview of various approaches to help address these challenges in both specific and general settings.

12:00–1:00 pm ET

Lunch

1:00–3:30 pm ET

Tutorial 4: Uncertainty and Interpretability in Machine Learning Models, Alex Gagliano

3:30–4:30 pm ET

Breakout Sessions with Days 3, 4, and 5 Lecturers and Tutorial Leads (Optional)

Friday, August 11, 2023

9:00–9:30 am ET

Lightning Talks

9:30 am–12:00 pm ET

Lecture 5: Incorporating Symmetry into Deep Dynamics Models, Robin Walters

Abstract

Given a mathematical model of a dynamical system, we can extract the relevant symmetries and use them to build equivariant neural networks constrained by these symmetries. This results in better generalization and physical fidelity. In these lectures, we will learn how to follow this procedure for different types of systems such as fluid mechanics, radar modeling, and robotic manipulation and across different data modalities such as point clouds, images, and meshes.

12:00–1:00 pm ET

Lunch

1:00–3:30 pm ET

Tutorial 5: Incorporating Symmetry into Deep Dynamics Models, Rui Wang

3:30–4:00 pm ET

Closing

Financial Supporters

We extend a sincere thank you to the following financial supporters of the 2023 IAIFI Summer School:

Northeastern University sponsors include: Office of the Provost, College of Science, Department of Physics, and Khoury College of Computer Sciences.

2023 Organizing Committee

- Jim Halverson, Chair (Northeastern University)

- Shuchin Aeron (Tufts)

- Denis Boyda (IAIFI Fellow)

- Anna Golubeva (IAIFI Fellow)

- Ouail Kitouni (MIT)

- Nayantara Mudur (Harvard)

- Sneh Pandya (Northeastern)

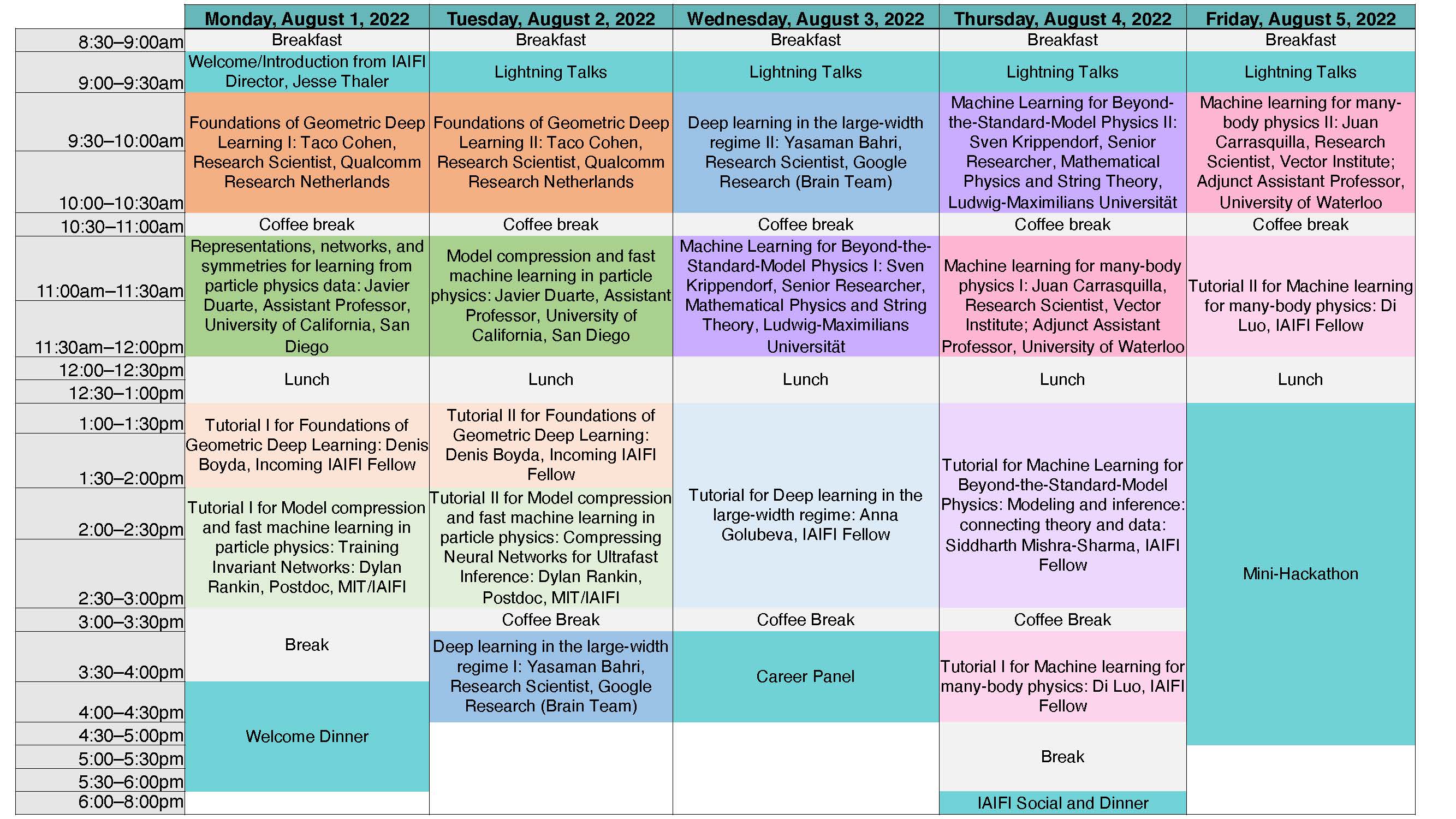

Summer School 2022

The first annual IAIFI PhD Summer School was held at Tufts University August 1–5, 2022, followed by the IAIFI Summer Workshop August 8–9, 2022.

Our first annual Summer School was held in a hybrid format over 5 days, with ~85 in-person attendees from over 9 different countries.

Summer School Agenda

View the detailed agenda for the IAIFI Summer School

View the complete Summer School program

Lecturers

Financial Supporters

We extend a sincere thank you to the following financial supporters of the first IAIFI Summer School:

2022 Organizing Committee

- Jim Halverson, Chair (Northeastern University)

- Tess Smidt (MIT)

- Taritree Wongjirad (Tufts)

- Anna Golubeva (IAIFI Fellow)

- Dylan Rankin (MIT)

- Jeffrey Lazar (Harvard)

- Peter Lu (MIT)

FAQ

- Who can apply to the Summer School? Any PhD students or early career researchers working at the intersection of physics and AI may apply to the summer school.

- What is the cost to attend the Summer School? There is no registration fee for the Summer School. Students for the Summer School are expected to cover the cost of travel and boarding.

- Is there funding available to support my attendance at the Summer School? IAIFI is covering the cost of the Summer School other than travel and lodging.

- If I come to the Summer School, can I also attend the Workshop? Yes! We encourage you to stay for the Workshop and will cover the cost of your registration if you attend both the Summer School and Workshop in person.

- Will the recordings of the lectures be available? We expect to share recordings of the lectures after the Summer School.

- Will there be an option for virtual attendance? We will determine whether virtual options will be provided based on interest.